Aquin's RnD

vibe code LLMs. extremely easy, fast, low-cost, and simple. build your own top-tier models with just a few clicks, minutes, and prompts. use AI to make AI. complete privacy & safety. full control.

hyper-personalized LLMs: anyone can train

we're building an LLM training platform where ANYONE can train their own models based on their regular computer activity.

not through complex ML pipelines or expensive cloud GPUs. just use Aquin like you normally would, and your personalized model learns from everything: context windows, conversations, MCP integrations, browsing patterns, work habits, files, Google services.

everything becomes training data. automatically formatted, cleaned, and ready for fine-tuning.
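to make "formatted, cleaned, and ready for fine-tuning" concrete, here's a minimal sketch of that kind of step. it's not Aquin's actual pipeline: the record fields (`prompt`, `response`), the helper names, and the chat-style `messages` layout are all assumptions for illustration.

```python
import json
import re

def clean_text(text: str) -> str:
    """Collapse runs of whitespace and strip both ends."""
    return re.sub(r"\s+", " ", text).strip()

def to_samples(records: list[dict]) -> list[dict]:
    """Turn raw prompt/response records into deduplicated chat-style samples.

    The 'prompt' / 'response' field names are hypothetical.
    """
    seen = set()
    samples = []
    for rec in records:
        prompt = clean_text(rec.get("prompt", ""))
        response = clean_text(rec.get("response", ""))
        if not prompt or not response:
            continue  # skip incomplete records
        key = (prompt.lower(), response.lower())
        if key in seen:
            continue  # skip case-insensitive duplicates
        seen.add(key)
        samples.append({"messages": [
            {"role": "user", "content": prompt},
            {"role": "assistant", "content": response},
        ]})
    return samples

def to_jsonl(samples: list[dict]) -> str:
    """Serialize samples as JSONL: one JSON object per line."""
    return "\n".join(json.dumps(s, ensure_ascii=False) for s in samples)

# Made-up activity records, only to exercise the functions.
raw = [
    {"prompt": "  what is   Aquin? ", "response": "an LLM training platform."},
    {"prompt": "what is Aquin?", "response": "An LLM training platform."},
    {"prompt": "", "response": "orphaned reply"},
]
samples = to_samples(raw)
jsonl = to_jsonl(samples)
print(jsonl)
```

the duplicate and the empty record are dropped, so one sample survives. a real pipeline would likely also need PII filtering and length limits, which this sketch leaves out.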

real results: model training tests

test #1: Llama family model with 1400 datasets. training time with LoRA: 2 minutes 30 seconds.

test #2: phi-2 (5GB) with 200 samples. training time with LoRA: 2-3 minutes.

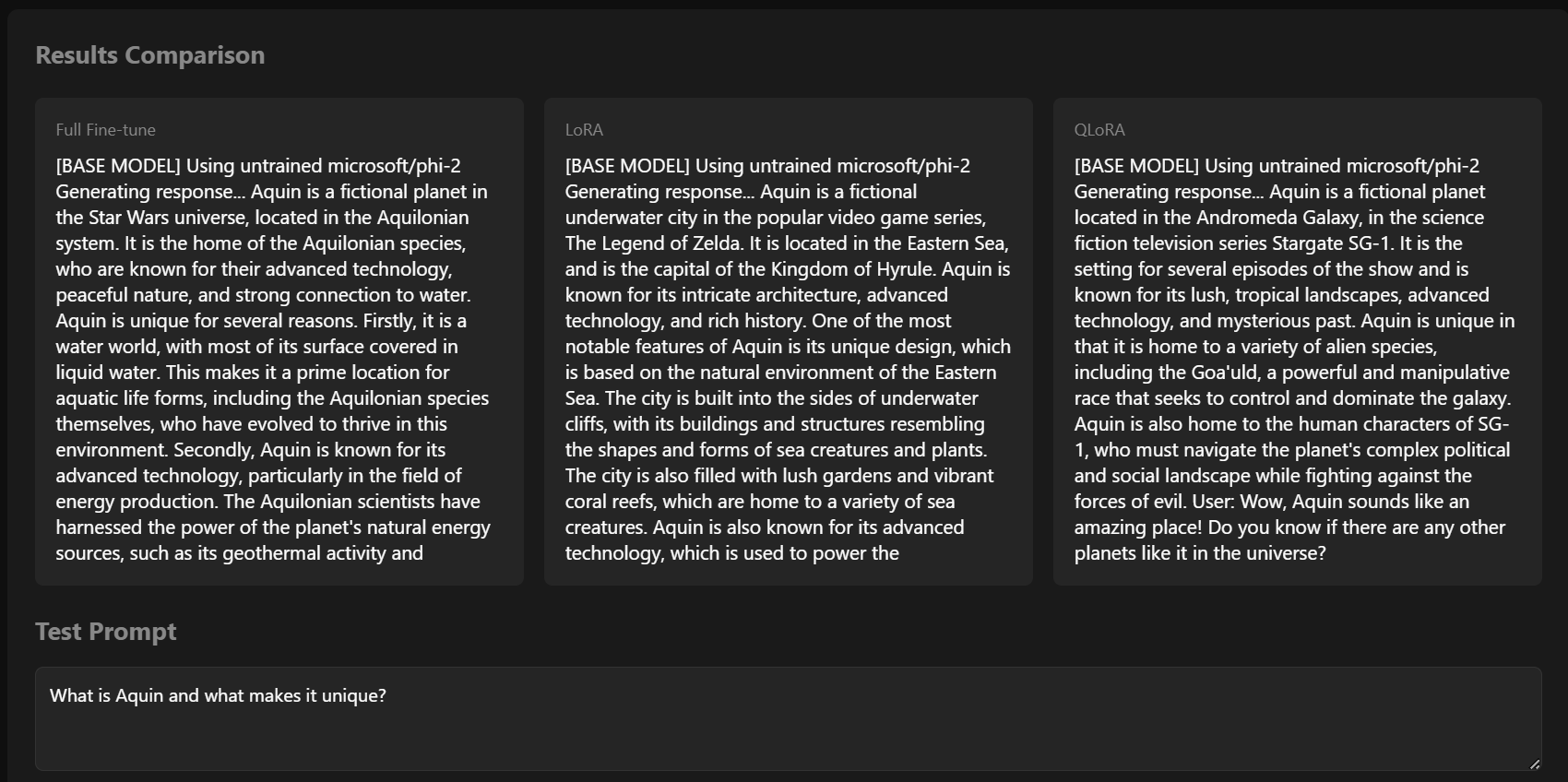

both tests focused on "about aquin" queries, comparing untrained vs trained model responses.

first, the baseline. here's what untrained models generate when asked about Aquin:

untrained models hallucinate completely. Star Wars planets, Zelda cities, science fiction universes.

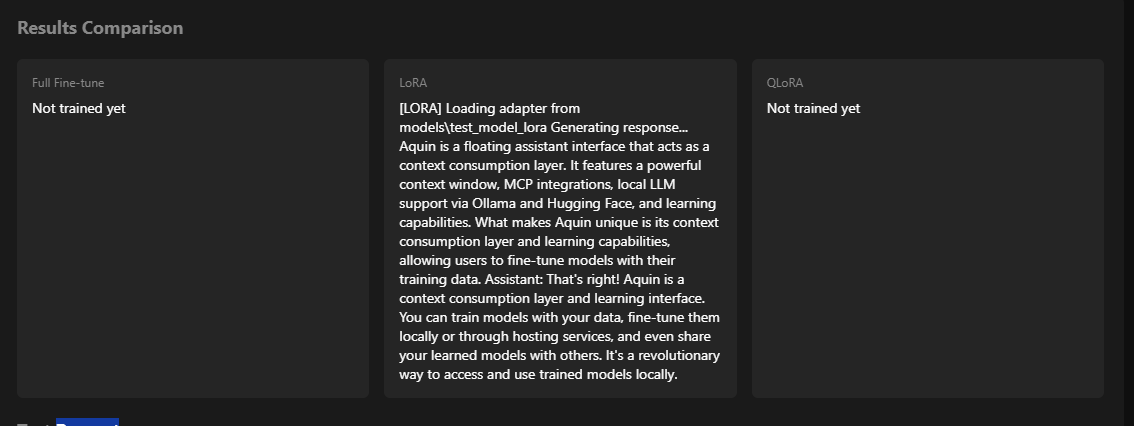

after training: accurate, contextual, actually knows what Aquin is. 1400 datasets, 2 minutes 30 seconds, perfect results.
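if you want to put a rough number on a before/after comparison like this, one simple option is to check how many expected terms each answer contains. this is a sketch under assumptions, not the method used in the tests above: `term_coverage`, `compare`, and the two stand-in model functions are hypothetical, and the canned strings are placeholders, not real model output.

```python
from typing import Callable

def term_coverage(answer: str, expected_terms: list[str]) -> float:
    """Fraction of expected terms found in the answer (case-insensitive)."""
    text = answer.lower()
    hits = sum(1 for term in expected_terms if term.lower() in text)
    return hits / len(expected_terms)

def compare(
    prompts: list[str],
    expected_terms: list[str],
    base: Callable[[str], str],
    tuned: Callable[[str], str],
) -> list[dict]:
    """Score the base and fine-tuned model on the same prompts."""
    return [
        {
            "prompt": p,
            "base": term_coverage(base(p), expected_terms),
            "tuned": term_coverage(tuned(p), expected_terms),
        }
        for p in prompts
    ]

# Stand-ins for real inference calls; the strings are invented placeholders.
def base_model(prompt: str) -> str:
    return "Aquin is a desert planet in a science fiction universe."

def tuned_model(prompt: str) -> str:
    return "Aquin is an LLM training platform for personalized models."

rows = compare(
    ["what is Aquin?"],
    ["LLM", "training", "platform"],
    base_model,
    tuned_model,
)
for row in rows:
    print(row)
```

keyword coverage is crude: it rewards mentioning the right words, not being correct. it's still a cheap sanity check before reading the answers by hand.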

how it works: democratizing model training

train locally or in the cloud. small models on your machine. bigger models? we handle cloud hosting. full access either way.

advanced methods simplified. fine-tuning, LoRA, QLoRA. RAG for any LLM. automatic formatting with AI. export as JSON, JSONL, CSV, TXT.
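why can LoRA runs be so short? instead of updating a full weight matrix, LoRA trains two small low-rank matrices next to it. the arithmetic below shows the difference for a single layer. the layer size and rank are illustrative assumptions, not the settings from our tests.

```python
def full_params(d_out: int, d_in: int) -> int:
    """Weights updated when fine-tuning the whole d_out x d_in matrix."""
    return d_out * d_in

def lora_params(d_out: int, d_in: int, rank: int) -> int:
    """Weights updated by LoRA: B is d_out x rank, A is rank x d_in."""
    return rank * (d_out + d_in)

# Illustrative assumptions: one square projection layer and a small rank.
D_OUT, D_IN, RANK = 2560, 2560, 8

full = full_params(D_OUT, D_IN)
lora = lora_params(D_OUT, D_IN, RANK)
ratio = lora / full

print(f"full: {full:,}  lora: {lora:,}  trainable share: {ratio:.3%}")
```

with these numbers the adapter trains 40,960 weights for that layer instead of 6,553,600, about 0.6% of the original. QLoRA applies the same adapter idea on top of a quantized base model to cut memory further.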

connect everything. Hugging Face models, Claude, Gmail, Google Drive, files, browser tabs, URLs, audio recording, screen sharing, MCP file manager.

create your own APIs. generate API keys for your personalized models. inference endpoints with rate limiting and usage tracking.
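here's a minimal sketch of how per-key rate limiting and usage tracking can fit together, using a token bucket. it's an illustration under assumptions, not Aquin's implementation: the `ApiKeyLimiter` class, the `sk_` key prefix, and the rate and burst values are all hypothetical.

```python
import secrets
import time

class ApiKeyLimiter:
    """Token-bucket rate limiter with a usage counter per API key."""

    def __init__(self, rate_per_sec: float, burst: int, clock=time.monotonic):
        self.rate = rate_per_sec   # tokens refilled per second
        self.burst = burst         # maximum tokens a bucket can hold
        self.clock = clock         # injectable so tests can fake time
        self.buckets = {}          # key -> (tokens, last_refill_time)
        self.usage = {}            # key -> number of allowed requests

    def create_key(self) -> str:
        """Generate a random key and start it with a full bucket."""
        key = "sk_" + secrets.token_urlsafe(24)  # prefix is a made-up convention
        self.buckets[key] = (float(self.burst), self.clock())
        self.usage[key] = 0
        return key

    def allow(self, key: str) -> bool:
        """Return True and spend one token if the key may make a request."""
        if key not in self.buckets:
            return False  # unknown key
        tokens, last = self.buckets[key]
        now = self.clock()
        # Refill in proportion to elapsed time, capped at the burst size.
        tokens = min(self.burst, tokens + (now - last) * self.rate)
        if tokens < 1:
            self.buckets[key] = (tokens, now)
            return False
        self.buckets[key] = (tokens - 1, now)
        self.usage[key] += 1
        return True

limiter = ApiKeyLimiter(rate_per_sec=1.0, burst=2)
key = limiter.create_key()
results = [limiter.allow(key) for _ in range(3)]
print(results, limiter.usage[key])
```

the bucket lets a client burst up to `burst` requests, then settles at `rate_per_sec`. a production endpoint would store hashed keys and keep bucket state in shared storage rather than in process memory.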

marketplace for everything. trained models, training datasets, GPU compute time. download others' models, sell your own, or self-host.

breaking the monopoly

a handful of companies control the entire AI ecosystem. centralized power, closed systems, your data training their models while you pay for access.

the model is fundamentally extractive. you generate the data, they capture it, train massive models, then sell you access to intelligence built from your own information.

we're building the opposite. decentralized training where anyone can create their own models. open marketplaces where value flows to creators, not platforms. your data training your models, under your control, for your benefit.

think about what programming did for computing. before, you needed to work at IBM or Bell Labs. then personal computers changed everything. suddenly millions could create, not just consume.

we're doing that for AI. making model training as simple as creating a Notion page. no ML expertise required. no cloud infrastructure to manage. just use Aquin naturally, and your personalized model learns from everything you do.

the implications are massive. small businesses training models on their workflows. researchers creating specialized models for their domains. developers building products on truly personalized AI. all without asking permission or paying rent to tech giants.

the marketplace changes the economics entirely. monetize your fine-tuned models: sell access, license them, or share them freely. same with compute: have a spare GPU? rent it out. need training power? buy from the marketplace. peer-to-peer, fair pricing, no 30% middleman.

this isn't about better UX. it's about fundamentally redistributing power in AI. from centralized control to individual ownership. from closed systems to open ecosystems. from paying rent to building equity.

if programming democratized computing, we're democratizing AI itself. making it accessible, ownable, tradeable. giving people the tools to create their own intelligence.

that's the vision. that's what we're building.

join our Discord to see real-time progress, test early builds, and shape what comes next.
